When you’re on the hunt for a data science job, you’ll need an updated resume. But just as important is a strong portfolio.

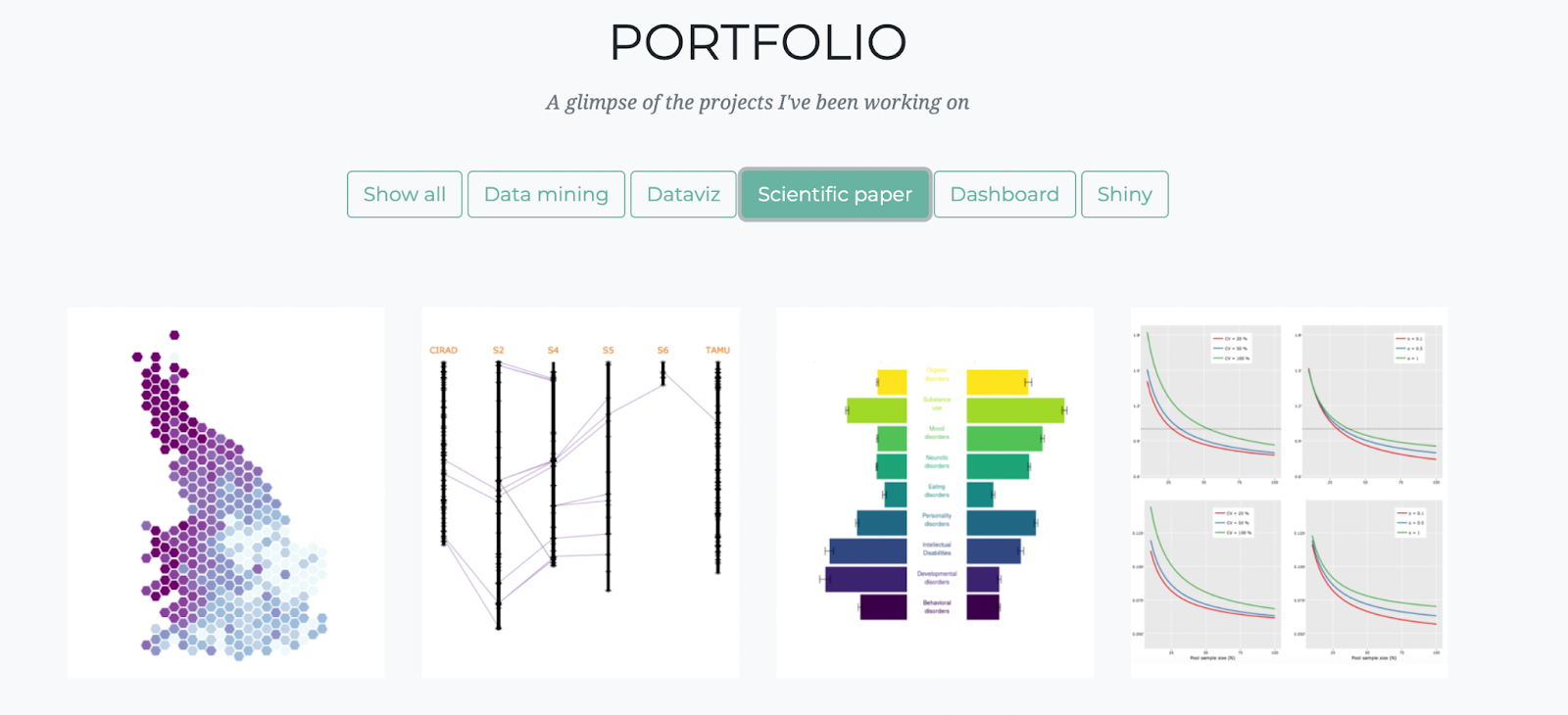

An impressive data science portfolio, like any other portfolio, typically consists of a collection of projects that showcase your expertise, skills, and problem-solving capabilities in the field of data science. It should show a balance of your technical prowess and your statistical sophistication.

So how do you design one, what should you emphasize, and, most importantly, how do you impress hiring managers? Keep reading to learn nine easy-to-follow steps to create an effective data science portfolio.

Step 1: Diversify your projects

To create a robust portfolio, include a diverse range of data science projects. Diversity demonstrates your versatility as a data scientist. Strive for a mix of projects of varying complexity to showcase your ability to tackle different challenges. If you don't have enough professional experience, include projects you completed during your studies or externships.

Here are a few good projects to include in your data science portfolio:

- Data Analysis projects: These projects involve exploring and analyzing datasets, extracting valuable insights, and presenting the findings in a clear and meaningful way.

- Machine Learning projects: Showcase your ability to build predictive models and algorithms using machine learning techniques on various datasets.

- Data Visualization projects: Demonstrate your skills in creating visually appealing and informative data visualizations that help viewers understand complex trends and patterns.

- Data Cleaning and Preprocessing projects: Highlight your proficiency in handling real-world data by cleaning, transforming, and preprocessing it to make it suitable for analysis.

- Natural Language Processing (NLP) projects: If applicable, showcase projects that involve working with text data, sentiment analysis, or language translation. Be sure to mention any work you’ve done with large language models (LLMs) such as ChatGPT.

- Deep Learning projects: If you have experience with deep learning, include projects that demonstrate the application of neural networks to image recognition, natural language processing, or other tasks.

- Web Scraping projects: If you have expertise in web scraping, include projects that involve extracting data from websites for analysis.

- Personal projects: Besides academic or tutorial projects, add personal projects that reflect your passion for data science.

Step 2: Work with real-world data

Whenever possible, use real-world datasets for your projects. Employers value data scientists who can handle messy and incomplete data effectively. Showcasing your skills in data cleaning, preprocessing, and transformation will set you apart from candidates who rely solely on clean and pre-processed datasets.
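As a rough illustration of the cleaning work described above, here is a minimal sketch using pandas. The dataset, the column names (`city`, `temp_f`), and the `clean` helper are invented for this example; a real project would need rules suited to its own data, and median imputation is only one of several reasonable choices.

```python
import pandas as pd

# Hypothetical raw records, invented for illustration only.
raw = pd.DataFrame(
    {
        "city": [" Boston", "boston", "Chicago ", None, "Chicago "],
        "temp_f": ["71", "n/a", "65", "80", "65"],
    }
)


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Return a tidied copy of the raw records."""
    out = df.copy()
    # Normalize text: trim whitespace and unify capitalization.
    out["city"] = out["city"].str.strip().str.title()
    # Convert to numbers; unparseable entries such as "n/a" become NaN.
    out["temp_f"] = pd.to_numeric(out["temp_f"], errors="coerce")
    # Drop rows with no city, then remove exact duplicate rows.
    out = out.dropna(subset=["city"]).drop_duplicates()
    # Fill the remaining numeric gaps with the column median.
    out = out.assign(temp_f=out["temp_f"].fillna(out["temp_f"].median()))
    return out.reset_index(drop=True)


cleaned = clean(raw)
print(cleaned)
```

In a portfolio write-up, it helps to state why you chose each step (for example, why you imputed missing values rather than dropping the rows), since that reasoning is what reviewers tend to look for.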

Through his coursework at TripleTen, Jacques Diambra got an externship at Yachay AI, where he used data to build a model that predicted where someone was tweeting from, mostly for disaster relief and similar efforts. He was able to bring that real-world work into his interview. “Before TripleTen, I had initial presentations that were just Excel,” says Jacques. “I went back and applied all the skills from the bootcamp to revamp my presentations. I created a website, I had data analyses, I had data science behind it. I connected to the Fed interest rate APIs, and built an interactive website.”

Step 3: Focus on quality over quantity

While having numerous projects in your portfolio may seem tempting, it’s essential to prioritize quality over quantity. Aim to create a few well-executed projects with thorough documentation and organized code. Employers will appreciate the depth of your work rather than the sheer number of projects.

Step 4: Leverage version control and hosting platforms

Use version control systems like Git to track changes and collaborate on your projects. Host your portfolio on platforms like GitHub to showcase your coding skills, allow easy access to your work, and demonstrate your project history.

Step 5: Bring details to life through data visualization

Data visualization is a powerful tool for communicating insights. Create visually appealing and informative charts, graphs, and dashboards to present your findings effectively. Use libraries like Matplotlib, Seaborn, or Plotly to enhance the visual impact of your data. Visualizations are particularly important for projects where the target audience is less technical — let the data tell a story!
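To make this concrete, here is a small sketch of a chart built for a non-technical audience with Matplotlib. The monthly figures and the output file name `signups_trend.png` are made up for illustration; the idea is simply to put the takeaway in the title and point at the value you want viewers to notice.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file without needing a display
import matplotlib.pyplot as plt

# Hypothetical monthly figures, invented for illustration only.
months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun"]
signups = [120, 135, 160, 150, 190, 230]

fig, ax = plt.subplots(figsize=(7, 4))
ax.plot(months, signups, marker="o", color="tab:blue")

# Call out the data point the audience should notice.
peak = signups.index(max(signups))
ax.annotate(
    "Peak month",
    xy=(months[peak], signups[peak]),
    xytext=(-80, -10),
    textcoords="offset points",
    arrowprops={"arrowstyle": "->"},
)

# State the takeaway in the title and label both axes.
ax.set_title("Monthly signups nearly doubled in six months")
ax.set_xlabel("Month")
ax.set_ylabel("New signups")

# Remove chart borders that carry no information.
for side in ("top", "right"):
    ax.spines[side].set_visible(False)

fig.tight_layout()
fig.savefig("signups_trend.png", dpi=150)
```

Seaborn or Plotly would work just as well here; the choice of library matters less than a clear title, labeled axes, and a single obvious message per chart.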

Step 6: Implement machine learning models

Use the skills you’ve learned to actually help build your portfolio. Incorporate machine learning algorithms into your projects to demonstrate your predictive modeling skills. Choose appropriate models, apply hyperparameter tuning, and interpret the results — these details are what a technical interviewer will dig into. Highlighting your ability to build accurate and practical models will impress potential employers.
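One possible shape for such a project is sketched below with scikit-learn. It assumes the library's built-in breast cancer dataset, a logistic regression model, and a small illustrative grid of values for the regularization parameter `C`; none of these choices come from this article, and a real project would pick a model and search space that fit its own problem.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a small built-in dataset and hold out a test set for the final check.
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Scaling lives inside the pipeline so each cross-validation fold
# is scaled using only its own training portion.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Illustrative search space: candidate regularization strengths.
param_grid = {"logisticregression__C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

# Report the tuned setting and how it performs on unseen data.
test_accuracy = search.score(X_test, y_test)
print("Best parameters:", search.best_params_)
print(f"Cross-validated accuracy: {search.best_score_:.3f}")
print(f"Held-out test accuracy: {test_accuracy:.3f}")
```

Reporting both the cross-validated score and the held-out score, and explaining any gap between them, gives an interviewer something specific to discuss.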

Step 7: Include a description

The first time someone sees your portfolio, you might not be there to explain each project. It’s important that you have descriptions so reviewers can learn more. Include a detailed explanation of the problem statement, the approach you took, the methodologies employed, and the results you obtained. This will help viewers understand your thought process and the insights you gained from each project.

Step 8: Seek feedback and collaboration

Share your portfolio with peers, mentors, and online communities to gather constructive feedback. Engaging in open-source collaborations and contributing to data science projects can also enhance your portfolio and demonstrate your collaboration skills.

Step 9: Continuously refresh your portfolio

Your data science portfolio should be a reflection of your continuous improvement and growth as a data scientist. Regularly update and refine your projects as you gain more experience and work on new endeavors. Stay up-to-date with the latest trends and technologies in the field.

Want more help to build your data science portfolio?

Data science continues to experience rapid growth, with the U.S. Bureau of Labor Statistics projecting a 35% increase in data scientist jobs in the next 10 years.

However, in such a complex profession, it’s essential to make a strong first impression and convey your knowledge and experience. TripleTen bootcamps empower our students to build an impressive portfolio by providing a comprehensive and hands-on learning experience. You’ll leave with tangible results, a new skillset, and an impressive portfolio full of projects. Sign up for one of our bootcamps and get started today.
